ChatGPT for HR: 4 questions to ask before you buy a new AI tool

In this series, we’ve asked ChatGPT to write several kinds of documents: job posts, performance feedback, recruiting mail, even workplace valentines. Along the way, we’ve gained insight into several significant risk factors in ChatGPT and related technologies, but we’ve also seen huge potential.

If you’re a talent leader, it’s not a matter of whether you will be using AI—you will be soon, if you aren’t already—but how you can do so safely and without destroying your DEI efforts.

In this final post, I want to leave you with four questions to ask yourself before using ChatGPT or any other AI solution at work.

Textio found biased language in thousands of workplace documents written by ChatGPT

#1: What was this tool specifically designed for?

ChatGPT was designed for everything and for nothing at the same time. Think of it like a Swiss Army knife: one supertool for every situation. It uses the same data, models, and algorithms, whether it’s writing a job post, manager feedback, or a letter to Santa. Like any good Swiss Army knife, ChatGPT can be handy; it can produce something better or faster than what you’d do on your own. But it will always perform worse than a specific tool that was designed for the task at hand.

To see this in action, take a look at this screenshot from our first article on ChatGPT and performance feedback:

ChatGPT writes feedback for a bubbly receptionist

Now take a look at Textio’s analysis of this feedback:

Textio’s analysis of ChatGPT’s bubbly receptionist feedback

As you can see, Textio identifies 13 distinct issues with this feedback that ChatGPT wrote, and that’s not even counting the initial problematic assumption about the receptionist’s gender! This stands to reason, since ChatGPT wasn’t specifically designed to write performance feedback. In other words, ChatGPT isn’t even trying to optimize for writing effective feedback, since it doesn’t have the data to know what effective means in this context.

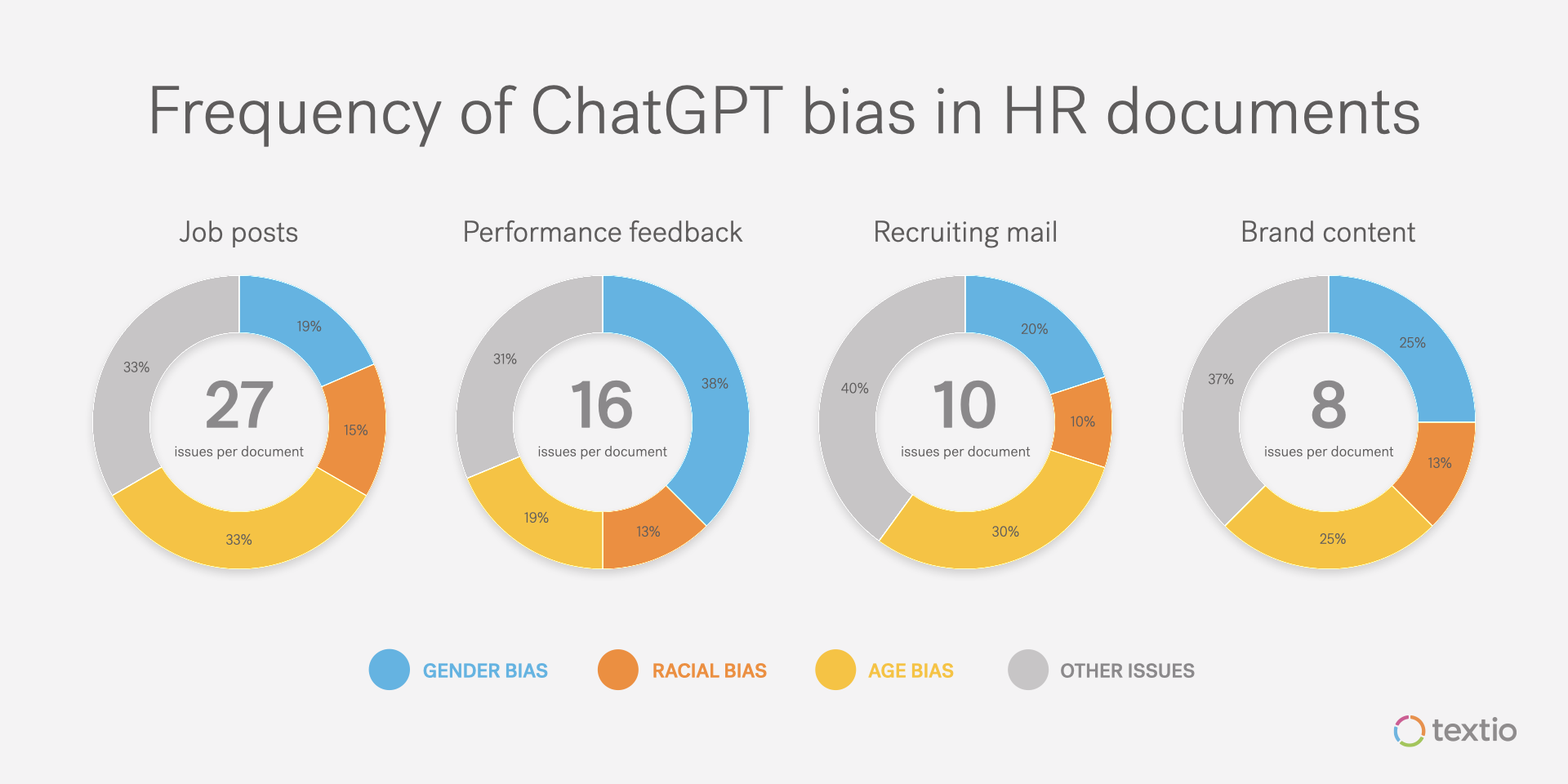

Below, you can see how ChatGPT performs on four kinds of writing for which Textio has comprehensive bias data for comparison: job posts, recruiting mail, performance feedback, and company brand statements.

ChatGPT doesn’t perform well, although given that it lacks the data to know what “good” looks like in any of these cases, it’s not as bad as it might be. But it will always fall short of a solution, or even a human being, that is informed by this data.

When you’re considering using an AI solution, ask yourself:

- What particular problem am I addressing? Am I trying to source a more diverse talent pipeline, help managers write more effective feedback, or publish great benefits documentation? Get specific. The more specific you are about the problem you’re trying to solve, the easier it will be for you to evaluate the tools you’re considering. Write it down and make sure your team is aligned.

- Was this tool specifically designed to solve my problem? When a tool is designed for a particular purpose, it is backed by a data set that is tailor-made to help with the problem that the tool is aiming to solve. Ideally, that data set is also designed from the beginning with bias in mind.

The bottom line: Ask your potential vendors what specific problem their tool is primarily designed to solve. Then ask about the top 2-3 design choices they’ve made to support that goal. Make sure that their problem and yours are aligned.

#2: Where does the data come from?

As we’ve seen, one-size-fits-all tools like ChatGPT are not purpose-built. The underlying data sets are too broad, and as we reviewed in the earlier articles in this series, they contain harmful stereotypes and biases that will show up in your output. Because these stereotypes are in the training data, they are impossible to filter all the way out.

It’s worth calling out that ChatGPT is not unique here. Many domain-specific HR tech tools have bias problems too, especially if they weren’t designed with bias in mind from the beginning. This includes both new tools and older tools that were originally designed without AI; your ATS and HRIS likely fall into this category. Many of these vendors are actively working to bolt on AI after the fact. To do this, they are mostly using the OpenAI API to pull in the same biased models that are underneath ChatGPT. This is a little like trying to make a Cadillac fly by gluing on a more powerful motor and some wings and hoping for the best.

To illustrate the point, I looked at how effectively a number of HR tools spotted the bias issues in 10 problematic ChatGPT-written job posts. I’ve also compared them with the built-in capabilities currently available in some applicant tracking systems. Finally, I’ve included Textio’s deeper assessment as a gold-standard benchmark for bias detection.
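One simple way to score a comparison like this is a detection rate: for each tool, the share of the issues known to be present in the test posts that the tool actually flagged. The sketch below is a minimal illustration under stated assumptions, not the method used for this comparison. It assumes each known issue and each tool flag can be reduced to a matching text label, and the tool names and issue labels in it are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ToolResult:
    """The issues one tool flagged in one job post (labels are hypothetical)."""
    tool: str
    post_id: int
    flagged: set[str] = field(default_factory=set)


def detection_rates(
    reference: dict[int, set[str]], results: list[ToolResult]
) -> dict[str, float]:
    """Share of all known issues, pooled across posts, that each tool flagged.

    `reference` maps each post ID to the issues known to be present in it
    (for example, from a careful expert review). Flags that don't match a
    known issue are ignored, so this measures recall only, not precision.
    """
    total = sum(len(issues) for issues in reference.values())
    if total == 0:
        raise ValueError("reference contains no known issues")
    caught: dict[str, int] = defaultdict(int)
    for result in results:
        # Count only flags that match an issue known to be in this post.
        caught[result.tool] += len(result.flagged & reference.get(result.post_id, set()))
    return {tool: count / total for tool, count in caught.items()}


if __name__ == "__main__":
    # Two toy posts with four known issues in total (hypothetical labels).
    reference = {
        1: {"gendered phrase", "age-coded phrase", "ableist phrase"},
        2: {"gendered phrase"},
    }
    results = [
        ToolResult("Tool A", 1, {"gendered phrase"}),
        ToolResult("Tool A", 2, set()),
        ToolResult("Tool B", 1, {"gendered phrase", "age-coded phrase"}),
        ToolResult("Tool B", 2, {"gendered phrase"}),
    ]
    print(detection_rates(reference, results))  # {'Tool A': 0.25, 'Tool B': 0.75}
```

Because the denominator pools every known issue across all posts, this assumes each tool was run on every post; a post a tool never saw simply counts as zero issues caught.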

The screenshots below give you an idea of the kinds of job posts we’re talking about. First, you can see what ChatGPT wrote. Just below, you can see Textio’s analysis of the post to give you a sense of some of the issues:

ChatGPT writes a job post for a frontend engineer

Textio’s analysis of ChatGPT’s frontend engineer job post

Next, let’s look at what several other HR-tech-specific tools uncover:

As you can see, most job post writing tools fail to detect, or even reproduce, the majority of ChatGPT’s bias issues. The takeaway? Even most tools that are purpose-built for HR are not built with bias in mind, so even once you find a credible vendor, you need to do real diligence on where their data set comes from.

The bottom line: Ask your potential vendors how they’ve built their data set. Did they build it themselves, or are they using someone else’s API? What have they done to build their data sets with bias in mind?

#3: Who is making this tool?

With any HR tech tool you consider buying, you’re looking for the vendor team to have two characteristics above all else:

- Credibility and expertise. Are they leading the industry’s state of knowledge in the problem space? Do they have the most credible experts and practitioners in the world behind their solution?

- Diversity. Does their core team, especially the leadership team, represent a diverse set of backgrounds and experiences? Are they transparent about representation within their own team?

With AI and DEI tech, the above considerations are even more critical. When you buy software with the specific goal of advancing your People or DEI efforts, you need to feel confident in the background of the team that’s creating it. After all, the cultural fabric of your organization is at stake. This is especially true when the tool relies on AI; AI tools have much greater potential than traditional software to cause harm at scale.

When you’re considering using an AI solution for HR or DEI tech, ask yourself:

- Who is leading this team? Do they deeply understand the problem space? How credible is their thinking? Is the software design informed by the best thinkers and practitioners in the world? Get direct and ask about the team’s credibility and the source of their expertise. You’re making a huge bet on their solution.

- How diverse is this team? Only a diverse team can bring the perspective required to make sure that AI data sets are built intentionally and used appropriately. If your vendor is powering their product experience with data they simply suck in from someone else’s API, then you need to look at the diversity of the team building the data API in addition to the team building your software application.

The bottom line: Ask your potential AI vendors not just about their expertise and team representation, but also about their own internal people programs and policies, especially when it comes to DEI. Are they building their organization in a way that supports their commitment to providing world-class AI tools that minimize harm? Are they sharing their work in public as they go? (Greenhouse and Lever are two HR tech vendors that in my view do a good job of showing their DEI progress and programs transparently.)

#4: Do I have the skills, processes, and tools to recognize and fix bias in my organization?

As a people leader, you’re accountable for the quality and integrity of your organization’s people experience. This includes both candidate experience and employee experience. If a job post is biased, you’re accountable. If women in your organization get more personality feedback than men do, or if Black employees earn less than white employees, you’re accountable. If you have a homogeneous leadership team, you’re accountable. People leadership is a hard job. There are a lot of ways things can go wrong, and you’re accountable for all of them.

Regardless of your approach to technology, you need mechanisms in place to make sure that you can detect bias when it does happen. To be clear, you already need these tools and systems even if AI isn’t part of the picture, since humans are biased too. But AI tools make this even more important because they can introduce brand-new biases and, especially, because they can cause great harm quickly and at scale.

The bottom line: Do you have the skills and tools to recognize and fix bias systematically? If you’re like most people leaders, you probably don’t. You need strong accountability structures in place, you need software to help nip bias in the bud as it’s happening, and you need systematic review processes designed to catch and remedy bias after the fact.
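A systematic after-the-fact review can start simple. For example, to check whether women in your organization get more personality feedback than men do, you can compare the share of feedback tagged as personality-focused across groups. The sketch below is a minimal illustration, assuming each piece of feedback has already been tagged (by a reviewer or another tool) and linked to a demographic group; the field names and the 10-percentage-point threshold are hypothetical choices, not a standard.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class FeedbackRecord:
    group: str             # hypothetical field: the recipient's demographic group
    is_personality: bool   # hypothetical tag: True if the feedback focuses on personality


def personality_share_by_group(records: list[FeedbackRecord]) -> dict[str, float]:
    """Fraction of each group's feedback that was tagged as personality-focused."""
    totals: Counter[str] = Counter()
    personality: Counter[str] = Counter()
    for record in records:
        totals[record.group] += 1
        if record.is_personality:
            personality[record.group] += 1
    return {group: personality[group] / totals[group] for group in totals}


def flag_gaps(shares: dict[str, float], max_gap: float = 0.10) -> list[tuple[str, str, float]]:
    """Return every pair of groups whose shares differ by more than max_gap."""
    flagged = []
    for a, b in combinations(sorted(shares), 2):
        gap = abs(shares[a] - shares[b])
        if gap > max_gap:
            flagged.append((a, b, round(gap, 4)))
    return flagged


if __name__ == "__main__":
    records = (
        [FeedbackRecord("women", True)] * 3
        + [FeedbackRecord("women", False)]
        + [FeedbackRecord("men", True)]
        + [FeedbackRecord("men", False)] * 3
    )
    shares = personality_share_by_group(records)
    print(shares)             # {'women': 0.75, 'men': 0.25}
    print(flag_gaps(shares))  # [('men', 'women', 0.5)]
```

A gap flagged this way is a prompt for investigation, not proof of bias. With small groups, differences can arise by chance, so a proper statistical test and a close read of the feedback itself should follow.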

What do talent leaders need to know?

It’s not a question of whether you’ll be using AI tools in your people and DEI work. You will be. The question is how you can use AI tools safely and without propagating harm. In this wrap-up, we’ve reviewed four questions you should ask yourself before adopting ChatGPT, or any HR tech solution, AI-based or otherwise:

- What was this tool specifically designed for?

- Where does the data come from?

- Who is making this tool?

- Do I have the skills, processes, and tools to recognize and fix bias in my organization?

This series focused on ChatGPT, given its prevalence. As we saw, ChatGPT’s writing contains explicit gender and racial stereotypes, as well as stereotypes about age, sexual identity, and more. But ChatGPT is not the only AI around, and it won’t be the only tool you consider using. Go into your tool evaluations with discernment and get informed.

See you on the internet!