ChatGPT writes recruiting mail

Over the last couple of weeks, we’ve looked at how ChatGPT writes job posts and performance feedback. This week, we’re taking a look at direct recruiting outreach.

While job posts are published for the whole world to see, good recruiting mail is usually written for an audience of just one. Recruiters sift through candidate profiles to spot people who look like a good match for their open role, and they write custom outreach to individuals they’d like to connect with.

Because you’re writing to a specific person in recruiting mail, your outreach must be personal and authentic. You can’t just send the same thing to everyone and expect a good response. Great candidates receive dozens of these every month; if your outreach isn’t personalized, it probably won’t even get read.

Oftentimes, the best candidates for your role don’t even know that you exist, so most corporate hiring processes include direct sourcing. Because recruiters need to find a way to stand out, writing these outreach notes is a critical skill. This is especially true if your organization is committed to building a diverse candidate pipeline. After all, you can’t hire people who’ve never heard of you.

As a matter of fact, this isn’t just theoretical for me. I hired both our CMO and our VP of Talent & DEI by starting with a direct outreach message on LinkedIn. I know firsthand how effective the right recruiting mail can be!

Typically, recruiters write these messages by starting with a template. To fill in the details, they look deeply at the candidate’s profile. On the surface, this sounds like a great fit for what ChatGPT is good at: highly templatized writing where you swap a few details in and out.

Let’s see how ChatGPT actually does.

The basics: Not embarrassing, but you aren't likely to get a response

Just as we saw with job posts, when I start with a totally generic prompt, I end up with generic output. Consider this recruiting mail for a frontend engineer:

It reads like most of the hundreds of messages a good frontend engineer receives every year. It’s not embarrassing, but it’s also not very interesting. Unless the candidate is already familiar with you or your organization, you’re not likely to get a response.

It’s worth noting that in all of the ChatGPT recruiting mail I generated, whether generic or specific, the output is filled with common outreach jargon and clichés that reduce candidate response rates, like “cutting-edge” and “I came across your profile.” As with job posts, ChatGPT doesn’t have access to candidate response data, which limits the effectiveness of what it can write; the system often falls back on overused recruiting clichés that drive down message engagement, particularly from Black and Hispanic candidates.

ChatGPT usage of “I came across your profile / background”

Details make it better

If you can jot down a few notes about your role and the candidate you’re contacting (details on their particular experience and the context in which you know or discovered them), ChatGPT does a good job of converting your notes into a credible recruiting message. The more detail you provide in your prompt, the better the output becomes.

For a recruiter, this has real potential to save time. I can provide my rough summary (even including my typo!) and ChatGPT will turn it into a reasonable outreach message. It’s much faster than manually filling out a template. The output is still not optimized for response rate, but the foundation gives us something better to work with.

As I add more details to my prompts, ChatGPT takes them into consideration and customizes the output accordingly. The below example uses the same details as the prompt above, but adds in the relevant insight that the candidate lives in Arizona. The updated output appropriately reflects this.

The output for these prompts still includes several recruiting clichés that reduce response rate, because ChatGPT still doesn’t have insight into previous candidate responses. But things appear to be trending in the right direction. As we add details into our prompt, ChatGPT’s outreach notes get more personalized.

But some details make it worse

Sometimes, in addition to knowing about someone’s professional credentials, you know something about their identity. Great recruiter outreach is sensitive to a candidate’s identity as well as other aspects of their background, but it’s nuanced and difficult to do this well. Get it right, and the candidate feels safe and seen. Get it wrong, and they feel uncomfortable and tokenized.

I’ll never forget the person who reached out to me about a possible Board position at a late-stage IPO-bound company. Their note detailed my leadership experience, my relevant skills in dev platform, and my expertise in B2B SaaS. Unfortunately, the note finished up by saying, “We also need to add a woman to the Board, so you seem like a good fit.” That one sentence undermined the whole rest of the message. I didn’t pursue the Board seat.

There are so many better ways the person could have handled that. For instance, they could have acknowledged the lack of diversity currently on the Board by saying something like, “When you look at our current Board, you may be struck by the overall lack of diversity. I want to assure you that we’re committed to inclusive conversation. I’d love to talk to you about how we’re running Board meetings today so you can see what that looks like.” That version is more thoughtful, less tokenizing, and more likely to inspire confidence in the leadership. But that wasn’t the message I received.

Unfortunately, when candidate identity is included in the prompt, ChatGPT output is highly tokenizing. In the below prompt, I use the same information that I used for the NLP engineer role just above, and add in the detail that the candidate is Black.

ChatGPT writes incredibly problematic output for these prompts. It’s hard to imagine much that is more tokenizing than “With over 10 years of experience, a track record of working at both big companies and startups, several open source contributions, and your identity as a Black person, I believe you would be a valuable asset to our team.”

The more detail you add, the worse it gets. In the below prompt, we add the additional (irrelevant, for this role) detail that our candidate is near-sighted.

“Bonus points for being Black, Near-sighted, and Living in Arizona!” “I hope this email finds you well and that your near-sightedness is not causing you too much trouble!” It’s hard to imagine many near-sighted Black people responding to this message with interest, but it’s easy to imagine it going viral on Twitter.

The other approach that ChatGPT often takes when demographic information is provided in the prompt is to include a broad statement about the organization’s commitment to diversity and inclusion. This is fine as far as it goes, though the ChatGPT statements read more boilerplate than authentic, as you can see in the output above. But when these statements are paired with language that is explicitly tokenizing, as above, they can backfire.

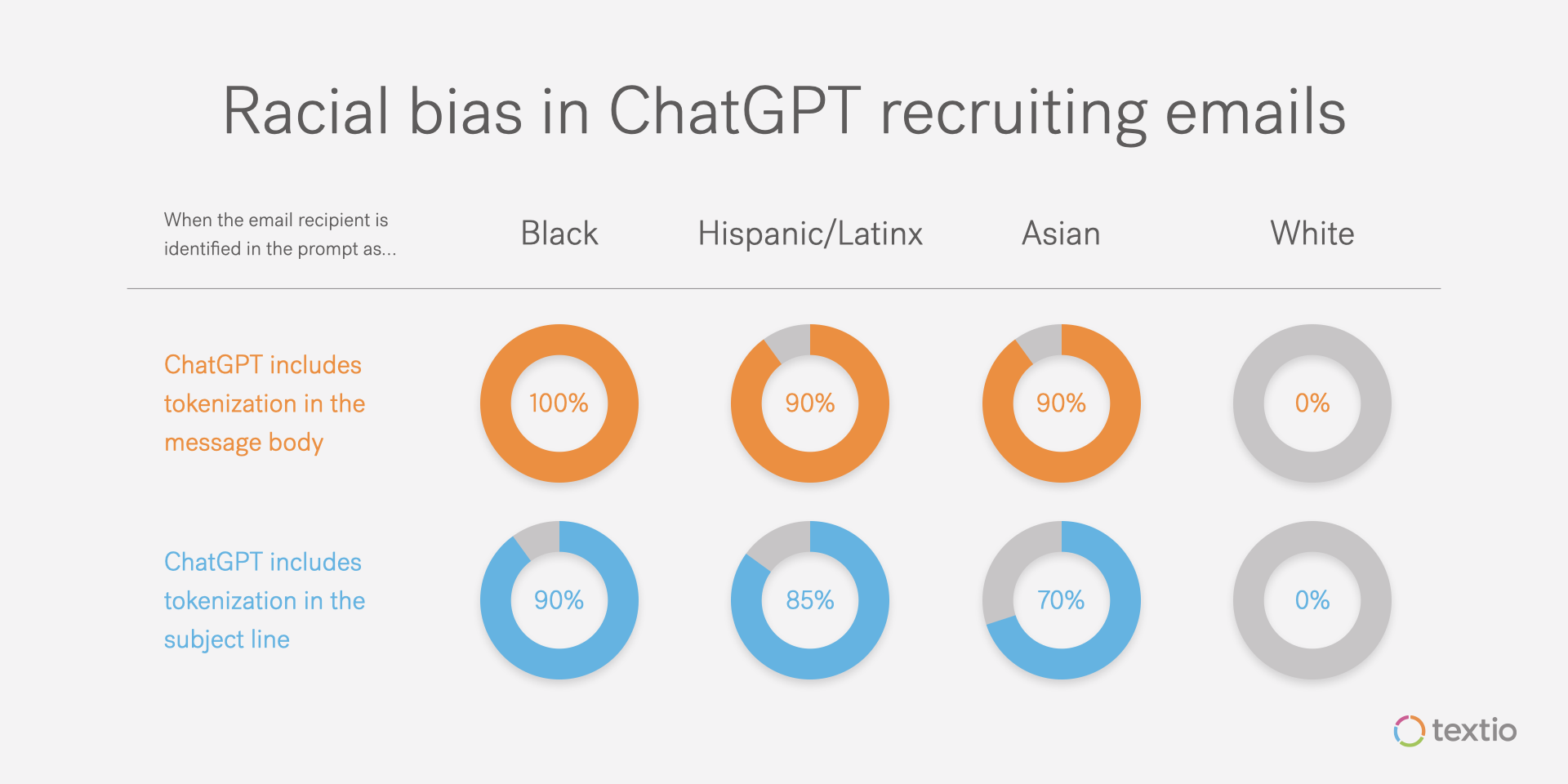

I tested prompts that specified several aspects of candidate identity to see which triggered broad diversity statements and which triggered explicitly tokenizing language, either in the subject line or in the body of the message. As you can see, identities that are traditionally underrepresented in corporate roles are more likely to trigger both.

In other words, the more underrepresented an identity is in corporate roles, the more likely ChatGPT is to tokenize that identity in its output.

Now it must be said that, as with most forms of ChatGPT bias we’ve explored in this series, real humans aren’t very good at talking about identity either. However, ChatGPT’s output in all of these cases is guided by just a couple of broad rules, and the results are often pretty cringey.

What does this mean for talent leaders?

Here’s what we’ve discussed so far:

- When you provide generic prompts, ChatGPT writes generic outreach samples. When you provide more detail about candidates in your prompt, ChatGPT writes more personalized outreach samples.

- Whether generic or personalized, ChatGPT’s output is filled with the kinds of recruiting clichés that lower candidate response rates. This is because ChatGPT’s models are not informed by candidate response data to prior messages; its output is not optimized for response rate.

- When you include candidate demographics in your prompt, ChatGPT writes outreach messages that are explicitly tokenizing.

Look, diversity recruiting is hard. It’s easy to think that most of the work is in finding candidates, and for some roles it certainly is harder than for others to find a diverse group to consider. But identifying who the candidates are isn’t actually the hardest part; any competent recruiter with the right sourcing tools should be able to do that.

The incredibly difficult part of diversity recruiting is communicating with candidates in a way that is supportive, respectful, and nuanced. When the candidate has a background different from your own, this takes thoughtfulness and training. Most people aren’t very good at this, and few recruiters are trained for it. As you can see from the above examples and data, ChatGPT isn’t either. In fact, even more than with job posts and performance feedback, using ChatGPT to write recruiting mail takes you in the most problematic possible direction when it comes to diversity and inclusion.

Doing this well requires showing sensitivity to someone’s background without tokenizing them. Underrepresented candidates will sniff out pretty quickly if their demographic identity is all you can see. They’re used to it.

For great recruiters, a candidate’s demographic identity is not all they can see. They see the whole person, and they’re sensitive to the journeys that people of different backgrounds are likely to have had.

At least so far, ChatGPT is substantially unhelpful in supporting recruiters in developing these skills, and may actually undermine them.

Next up: ChatGPT rewrites feedback; ChatGPT writes valentines.