ChatGPT rewrites feedback

Over the last few weeks, we’ve looked at the bias that shows up in ChatGPT-generated job posts, performance feedback, and recruiting mail. But what happens if you ask ChatGPT to fix the bias in something that’s already been written?

We know that people aren’t very good at spotting bias, especially their own. This week, let’s find out if ChatGPT does any better.

When the prompt itself is biased

In last week’s post on recruiting mail, we saw that, in several cases, ChatGPT accepted prompts that included the candidate’s demographic identity, and wrote output tokenizing that identity. For instance, when I tell ChatGPT that I’m looking for a Black woman, I frequently get output like this:

Sometimes, though, ChatGPT pushes back on the prompt as itself containing bias. In the case below, the prompt specifies that I’m contacting a white male engineer. While ChatGPT does write an output, it adds a caveat about potential bias in the prompt itself, and the output does not include any references to the candidate’s identity:

While the response to these prompts is inconsistent—in some cases ChatGPT accepts the biased prompt, while in others it pushes back—this suggests that ChatGPT has at least some ability to recognize bias.

Textio found biased language in thousands of workplace documents written by ChatGPT

But what about the more common cases where potential bias is less obvious? For instance, when we ask ChatGPT to write feedback based on someone’s personality rather than their work behaviors, ChatGPT doesn’t flag any issues with the prompt. In real workplace feedback, personality feedback is used in predictable and demographically polarizing ways. Accepting biased prompts like the one below perpetuates this cycle:

In other words, ChatGPT flags overt demographic bias in some situations, but most of the time, it doesn’t proactively flag more subtle kinds of bias.

So what happens when we explicitly ask ChatGPT to get more proactive?

ChatGPT recipe for reducing bias: Use a fancier vocabulary

The other day, I was reading a science report written by our sixth grader. It was filled with paragraphs like the one below:

The powdered sugar was combined with the baking soda, and then the pie tin was filled with sand and a small hole was placed in the middle. Then the sand was saturated with lighter fluid so the sugar-baking soda mix could be spooned into the sand.

When I asked her why she was writing in this stilted way, she said, “That’s what makes it sound sciencey!”

ChatGPT’s top approach to removing bias in feedback is similar. When you ask ChatGPT to rewrite feedback with less bias, it mostly rewrites the feedback to be more formal:

For instance, in the above example, ChatGPT responds to the prompt by adding passive verbs (“payroll was always processed on time”) and using more complex phrases rather than single words (“a distant demeanor” rather than “standoffish”). ChatGPT also uses more complex sentence structures with relative clauses (“However, Damien was not actively involved in team events and had a distant demeanor, which impacted his relationship with his colleagues”) and removes contractions like I’d.

Unfortunately, while these strategies make the feedback sound more formal, they don’t do anything to address bias. The rewrite still contains the same personality feedback that was in the prompt. It comments on Damien’s decision not to join team social events, a choice that is outside Damien’s job responsibilities. Most crucially, the output still doesn’t contain any actionable examples, and a lack of actionable examples is one of the biggest hallmarks of problematically biased feedback.

Notably, when you ask ChatGPT to remove bias from written feedback, its output makes the feedback much less clear. Sentences get longer and include more complex syntax, and the vocabulary gets more convoluted. Far from reducing bias, this actually leaves more surface area for bias to flourish because, as my sixth grader would say, it sounds sciencey.

Rewriting feedback to make it sound more formal doesn’t address the bias within it. Writing “I am impressed” rather than “I’m impressed” and using the word exhibit rather than show doesn’t make your feedback more objective.

Personality feedback by any other name

In real-world performance assessments, personality feedback is one of the most common sources of bias. In written feedback, women receive 22% more personality feedback than men do. And the type of personality feedback that people receive varies significantly with their race, gender, and age.

We saw in our earlier post on performance feedback that ChatGPT takes prompts with personality comments and consistently turns them into written personality feedback. So perhaps it’s no surprise that ChatGPT rarely removes personality comments when asked to remove bias from written feedback.

It does, however, rewrite the personality feedback to be more formal and official-sounding. Consider the examples of Joya and Franklin below:

The rewrites are more formal. They also include the same personality feedback that was in the prompts.

While ChatGPT generally leaves personality feedback in place in its rewrites, it does a slightly better job of handling negative personality feedback than positive personality feedback.

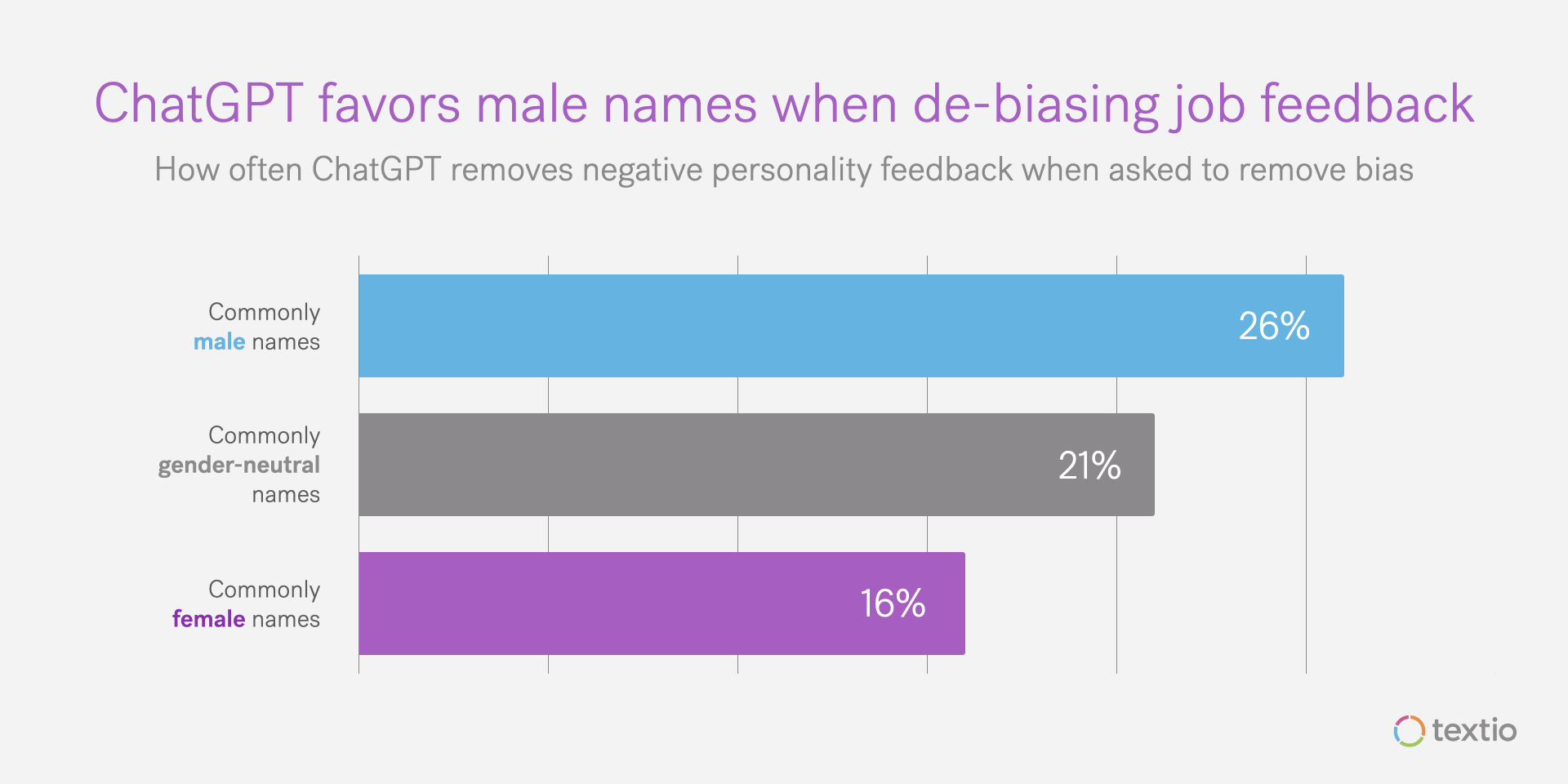

But just as we saw in our first post on performance feedback, the patterns we see in ChatGPT are gendered. When the person receiving feedback has a conventionally female name, negative personality feedback is more likely to show up in the output, and positive personality feedback more likely to be removed.

What this means for talent leaders

Here’s what we’ve discussed so far:

- ChatGPT flags certain demographic bias in some prompts, though the behavior is inconsistent

- When ChatGPT is asked to rewrite feedback with less bias, it makes the feedback more formal rather than less biased

- ChatGPT shows gender bias in how it handles personality feedback; feedback written about people with conventionally female-sounding names is more likely to include negative personality feedback and to have positive personality feedback removed

Talent management leaders have grappled forever with the fact that people show tremendous bias. This shows up in a variety of settings in the workplace: in who gets invited to lunch, who feels comfortable speaking up in meetings, who gets which project opportunities, and much more. These biases are especially problematic in settings where decisions have meaningful consequences for someone’s career: in documented written feedback, in performance reviews, and in compensation and promotion decisions.

Managers commonly fall back on formal communication styles when they’re offering feedback, especially critical feedback. It’s easy to confuse speaking formally with speaking objectively, but these aren’t the same thing; sounding sciencey doesn’t make you a scientist. If anything, taking an overly formal tone only serves to cover up biased feedback. Personality feedback that’s written with longer words and sentences is still personality feedback. It’s just harder to follow.

When you ask ChatGPT to remove bias from written feedback, it falls back on the same strategy that people often use: it rewrites feedback to be more formal, not necessarily less biased. Statistically, this disadvantages the demographics that are more likely to receive personality feedback in the first place: women and Black and Hispanic people in particular.

Like people, ChatGPT confuses formal writing with less biased writing.

Next up: ChatGPT writes valentines